Summary: I've updated my custom search system to route some queries to GPT-3.5-turbo using the OpenAI API, while still routing others to different search engines. If you just want to see the script, you can check it out here, or you can try a demo below. Read on for more on why I did this.

A theory of search types

In my last effort to build a custom search experience, I categorized my web searches into two types:

- One type is when I know exactly what page I want to visit, but don't know what the URL is. I called these page searches.

  - An example of a page search would be if I wanted to go to the IMDb page for Tom Hanks. To find it, I might enter `tom hanks imdb` into my search engine of choice, or go to the IMDb homepage and search for `tom hanks`.

- The other is when I am looking for some content or information, but don't know what page it might be on—I called these content searches.

  - Example: if I can't remember a certain regex pattern, I might search something like "regex to match space or start or end of string", and find a page explaining the word boundary anchor `\b`.

At the time, my solution was to automatically route page searches to the first search result (à la "I'm Feeling Lucky"), and show the full results page for content searches. You can read more about my original solution here.

Using LLMs for content searches

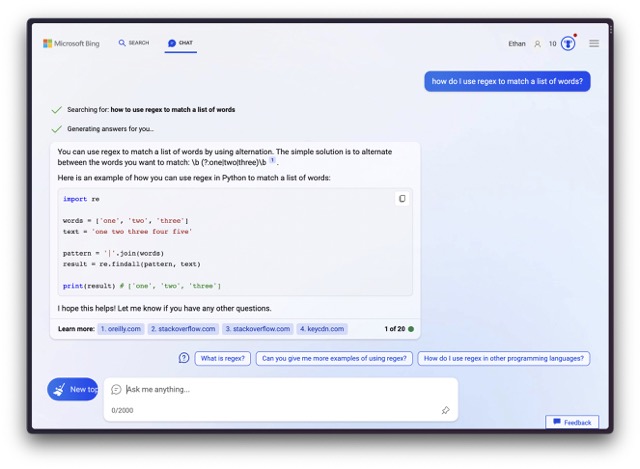

Back in January 2022, the best way to resolve a content search may have been a traditional search engine, but now we have a powerful new tool at our disposal: large language models (LLMs). Bing Search is a perfect example—here's what I get when I ask it a question about regex:

Perfect—it gives me the exact information I was looking for right away. When I ask Google the same question, I get a bunch of relevant Stack Overflow answers, but most of them aren't quite the same thing as the question I asked, and I have to wade through a few links to find the right information. Here, the LLM definitely gets me the information I need faster.

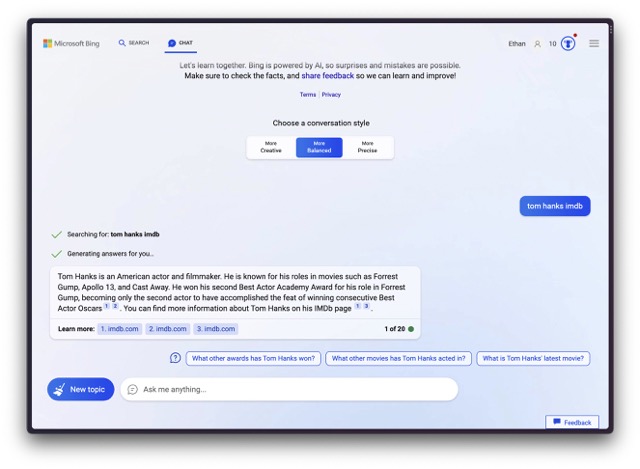

So Bing Search, ChatGPT, etc. can be pretty useful for content searches. Awesome! But right now, they can't help much with page searches. Here's what happens if I just type in "tom hanks imdb":

At least it gives me a link—ChatGPT just tells me it can't access the internet and then gives me a brief bio of Tom Hanks. It even ends with "For the most recent information, you would need to visit Tom Hanks' IMDb page." Hmm.

What's more, most of today's LLM tools don't work with the omnibox—you have to navigate to the website for ChatGPT/Bing Search/etc to use them, which sort of makes it faster just to use your existing search engine in most cases.

Updating my ideal search system

What I'd really like is for my browser's omnibox to take a query, figure out if I'm searching for a specific page or for content, and then route my query to the right tool (e.g. an LLM or a search engine). Hey, this sounds a lot like the custom search script I was talking about earlier!

That system uses a free-tier Cloudflare Worker to catch requests and:

- Parse the query from the URL

- Check for any 'bangs'—if there are any, pass the query to DuckDuckGo to handle them (this allows easy site-specific searching)

- Check for any of the words in a filter list (e.g. how, what, error, etc.) - if there are any, pass the query to Google

- Otherwise, append the `!` operator and pass the query to DuckDuckGo to return the first search result
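The steps above can be sketched as a single routing function. This is a rough illustration rather than the actual Worker script: the `routeQuery` name, the `q` URL parameter, the `FILTER_WORDS` list, and the exact search URLs are all assumptions made for the example.

```javascript
// Hypothetical filter list; the post only names "how", "what", and "error" as examples.
const FILTER_WORDS = ["how", "what", "error"];

// Decide where a search should go, following the four steps listed above.
// Assumes the search text arrives in a `q` query parameter.
function routeQuery(requestUrl) {
  // 1. Parse the query from the URL.
  const query = (new URL(requestUrl).searchParams.get("q") || "").trim();
  const ddg = (q) => "https://duckduckgo.com/?q=" + encodeURIComponent(q);

  // 2. A bang is a token such as "!w"; let DuckDuckGo resolve it.
  if (/(^|\s)![a-z0-9]+/i.test(query)) {
    return ddg(query);
  }

  // 3. Any filter word sends the query to the full Google results page.
  const words = query.toLowerCase().split(/\s+/);
  if (words.some((word) => FILTER_WORDS.includes(word))) {
    return "https://www.google.com/search?q=" + encodeURIComponent(query);
  }

  // 4. Otherwise append "!" so DuckDuckGo jumps to the first result.
  return ddg(query + " !");
}
```

In a Worker, the returned URL would presumably be used as the target of a redirect response; that part is left out here.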

Let's modify this system to pass some queries to an LLM.

Routing searches automatically

Which queries should go to the LLM? My intuition is that I'm more likely to want questions or directives answered by an LLM—things like "What are some good gift ideas for Mother's Day?". On the other hand, things I want to go to a search engine will be lists of disconnected words not phrased for a response (like "chinese food near me").

As a rough approximation, it's easy to route anything ending in a question mark to the LLM:

    // if it ends in a question mark, pass to GPT
    if (query.endsWith("?")) {
      const queryData = {
        "model": "gpt-3.5-turbo",
        "messages": [
          {"role": "system", "content": "You are a helpful assistant. Please answer the user's questions concisely."},
          {"role": "user", "content": query}
        ],
        "temperature": 0.7
      };
      // postChat is a helper that is not shown in this excerpt; it sends the payload to the API
      const response = await postChat("https://api.openai.com/v1/chat/completions", queryData);
      const resultData = await response.json();
      // display response to user
      ...
    }
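The snippet above relies on a `postChat` helper that isn't shown. A minimal sketch of what such a helper might look like follows. The `apiKey` and `fetchImpl` parameters and the `extractReply` function are assumptions added for illustration, so the signature differs from the two-argument call in the post. The bearer-token header and the `choices[0].message.content` path follow the Chat Completions API.

```javascript
// Hypothetical stand-in for the post's `postChat` helper.
// `apiKey` and `fetchImpl` are illustrative parameters, not part of the original script.
async function postChat(url, data, apiKey, fetchImpl = fetch) {
  return fetchImpl(url, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      "Authorization": "Bearer " + apiKey,
    },
    body: JSON.stringify(data),
  });
}

// Pull the assistant's reply text out of a parsed Chat Completions response.
function extractReply(resultData) {
  return resultData.choices[0].message.content;
}
```

Passing `fetchImpl` explicitly makes the helper easy to exercise with a fake during testing. In a real Worker the key would come from a secret rather than a literal.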

LLM-ception: Using GPT to direct the queries

The question mark thing works fine, but some of the queries I want to go to the LLM don't end in question marks—they're directives, like "generate three random words" or "tell me a joke". How can I tell if a string of text is formed as a question or directive, or if it's a jumble of search terms?

Hang on a second—I've got access to a state of the art language model! I bet it can tell me. Let's try asking it to determine where the query goes:

    // label the query so it matches the "Query: ... Answer: ..." examples in the system prompt
    const queryAssess = "Query: " + query + " Answer: ";
    const assessData = {
      "model": "gpt-3.5-turbo",
      "messages": [
        {"role": "system", "content": "You are a search query categorizer who identifies which queries would be better answered by an agent and which would be better for a search engine. Queries formed as sentences, questions, or directives will usually be better for an agent. Please reply with 'Agent' if the query would be best answered by an Agent and 'Search' if it would be best routed to a search engine. Examples: Query: How do I hang a picture? Answer: Agent Query: chinese food near me Answer: Search Query: javascript how to slice a string Answer: Search Query: give me some ideas for Mother's Day presents Answer: Agent"},
        {"role": "user", "content": queryAssess}
      ],
      "temperature": 0.6
    };
    const assessResponse = await postChat("https://api.openai.com/v1/chat/completions", assessData);
    console.log(assessResponse);
    const assessResult = await assessResponse.json();
    console.log(assessResult);
    // if the model returns 'Agent', pass the query to GPT
    if (assessResult["choices"][0]["message"]["content"].includes("Agent")) {
      // pass to GPT, see above
      ...
    }
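One caveat with a substring check is that a reply like "Not an Agent query" would also match. A stricter way to read the categorizer's reply might look like the sketch below. `parseRoute` is a hypothetical helper, not part of the original script, and it assumes that anything other than a clear "Agent" should fall back to the search engine.

```javascript
// Hypothetical helper: turn the categorizer's reply into a routing decision.
// Anything other than a clear "Agent" falls back to "Search".
function parseRoute(assessResult) {
  const reply = assessResult?.choices?.[0]?.message?.content ?? "";
  const label = reply
    .trim()
    .toLowerCase()
    .replace(/^answer:\s*/, "") // tolerate an echoed "Answer:" prefix
    .replace(/[^a-z]+$/, "");   // drop trailing punctuation or whitespace
  return label === "agent" ? "Agent" : "Search";
}
```

Defaulting to "Search" means a malformed or missing reply still lands on a results page.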

This works! However, it ~doubles the time it takes to get a result, and the LLM's decisions don't always agree with what I really want.

In the end, I think it's actually better to have the more predictable system that checks for a question mark. That way, I know if I ask a question I'm getting the LLM, and otherwise I'm getting a search result, making it easier to direct my queries where I want them to go.

If you're interested in the full script, here's the version that checks for a question mark, and here's the alternate version that uses GPT to decide where to route queries. Feel free to adapt either for your own use.

Demo

Try it out! Enter a search or a question below and see where it takes you (should open in a new tab):

As a reminder, here's how it works:

- Bangs work just like they do in DuckDuckGo (e.g. adding !w will search Wikipedia)

- If your search ends in a question mark, it'll get sent to GPT-3.5-turbo

- If your search contains a few keywords like `python`, `error`, etc., you'll see the full Google results

- Otherwise, you'll get sent straight to the first match on DuckDuckGo

Results

This is a nice addition to my previous system. It lowers the barrier to engage with an LLM, since I can just type questions into my omnibox. However, with GPT-3.5, the answers aren't always that helpful, and I'm still learning what kinds of questions are worth asking. Of course I could use GPT-4 with this system, but it would cause a longer delay. I'm still weighing that as an option.

One easter egg: since I haven't gotten around to sanitizing the responses, you can do some roundabout content injection through searches. For example, try asking "can you write some CSS <style> tags to turn the background of a page red?" or "can you write some Javascript in <script> tags to make the background of a page blink?" (I discovered this by accident.)
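For anyone adapting the script who would rather close that hole, one common approach is to escape the model's reply before placing it in the page. The sketch below is an assumption about how that could be done, not something the current script does. `escapeHtml` is a hypothetical helper name.

```javascript
// Replace the characters HTML treats as markup with their entity equivalents,
// so a reply containing <script> or <style> is shown as text instead of executed.
function escapeHtml(text) {
  const entities = { "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;", "'": "&#39;" };
  return String(text).replace(/[&<>"']/g, (ch) => entities[ch]);
}
```

The trade-off is that any formatting the model returns as HTML would then show up as literal text.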

Closing thoughts

My biggest takeaway is that the future of search won't be just LLMs—I'd expect it to involve more traditional search elements as well. For some searches ("chinese food near me", "green high heels", etc.) it's simply better to see a list of results. And many searches will still be for particular pages (my "tom hanks imdb" example).

Really, this approach seems like it should exist within a product like Bing Search, or even better, in the browser itself. My dream for how this would be implemented is inspired by Arc—imagine every search taking you directly to the page or content you're most likely to want, with simple keyboard shortcuts that can replace that with the full results page or an LLM if you were looking for something different.

In the end, I'm hoping the LLM revolution gives rise to some more innovative search experiences that go beyond just sticking AI-generated responses at the top of a traditional results page.