Summary: I believe the future of AI won't be one godlike superintelligent model, but thousands of nearly-free micro-agents constantly meeting hyper-specific needs.

Here's Roy Longbottom on the growth of computing power:

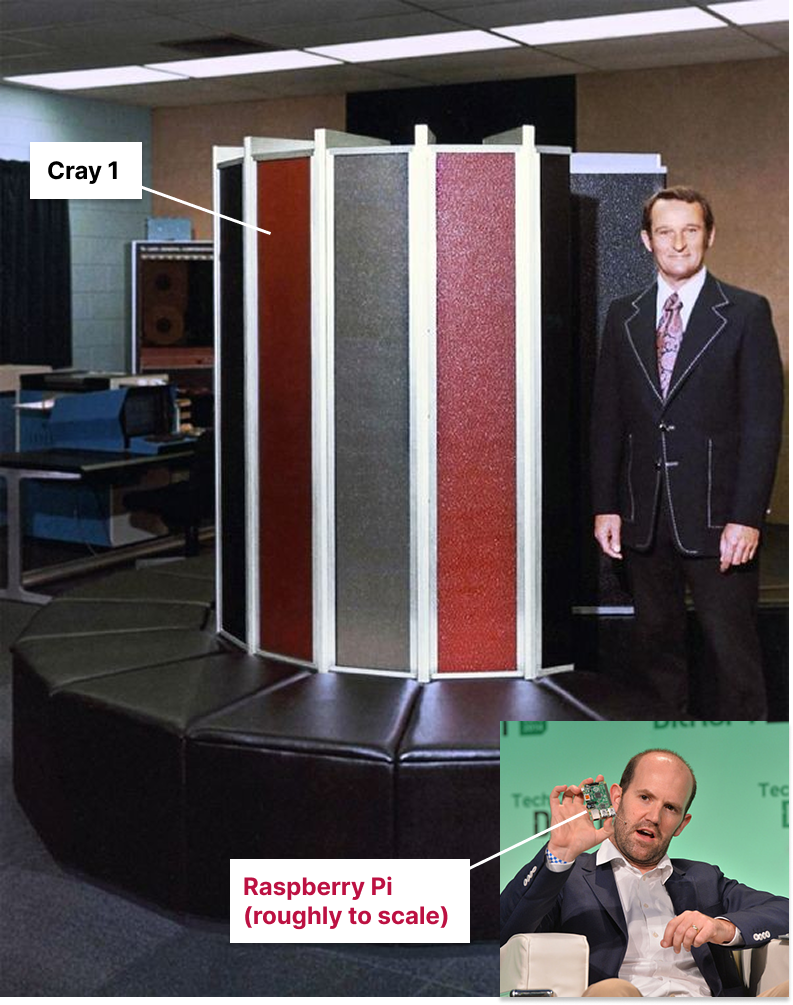

"In 1978, the Cray 1 supercomputer cost $7 Million, weighed 10,500 pounds and had a 115 kilowatt power supply. It was, by far, the fastest computer in the world. The Raspberry Pi costs around $70 (CPU board, case, power supply, SD card), weighs a few ounces, uses a 5 watt power supply and is more than 4.5 times faster than the Cray 1." Source

That passage refers to the original Raspberry Pi. I have a Raspberry Pi Model 3B, now several years old, which I've used for various projects but mostly now just sits around collecting dust. It's dozens of times more powerful than the Cray 1.

The Cray 1, which included built-in seating, compared to a Raspberry Pi

In 1960, it would have been nearly inconceivable to think that everyone would have a computer in their home. In 1980, it would have been crazy to think that most people would have several computers, each more powerful than a contemporary supercomputer. But today, we have dozens — devices like phones and smart watches, of course, but also the chips embedded in our cars, TVs, appliances, children's toys, toothbrushes, and more.

Even our toilets have computers in them

Scale over size

It's worth noting, of course, that the performance of cutting-edge computers has also massively improved. Today's state-of-the-art computers are exponentially more powerful than a Raspberry Pi. And even an everyday modern laptop has 100,000-1,000,000x more computing power than something like a TRS-80.

But I'd argue this top-line growth doesn't really matter that much to the average consumer's day-to-day life. To most people, who use their most powerful computers to browse the web, use cloud services, and maybe do some word processing, the last 10-15 years of improvement haven't resulted in a meaningfully different user experience.

What's been transformational is how small these computers got, resulting in entirely new products like the smartphone, and also how cheap simpler computer chips became, enabling all sorts of new features for everything from cars to toasters. People may not even realize that some of these products have computer chips, but they interact with those features every day.

I think something similar is starting to happen with language models.

Superintelligence versus super-accessible intelligence

When I read discussion about the AI future, I tend to see focus on single all-powerful models — paperclip maximizers, or superhuman self-improving coding agents, or a version of Siri that can reliably set a timer (zing). Even a scenario as detailed as AI 2027 seems focused almost entirely on the capabilities of the most powerful models and how they might evolve over time.

To me, this is thinking about the future all wrong, in the same way that someone who saw a Cray 1 in the 70s and imagined the future of computing being a few dozen Cray 50s would have been wrong.

I don't think the future will look like one godlike superintelligent AI model that can answer anything we ask. Instead, it will be ten thousand micro-agents simultaneously meeting hyper-specific needs. It will be agents that are so cheap they can run in the background, continuously monitoring and ready to jump into action, without meaningful expense to the user. It will be tiny AI models embedded into everything from children's toys to TVs to make them more intelligent and interactive.

Imagine those greeting cards that play music when you open them, but they're interactive and can have a conversation with you

Envisioning the micro-agent future

So what might that version of the AI future look like — where modern LLM performance is nearly free and widely available on all kinds of systems? Here are a few ideas:

AI systems could prevent prompt engineering by having 100 micro-agents review inputs and outputs, each tuned to look for different malicious behavior. That could still leave hundreds of agents to process user requests.

Cheap agents could run constantly "behind the scenes" of user systems to remove anything that looks like an ad from a webpage, reviewing both the raw HTML and what's visually displayed to the user. These agents could even watch videos ahead of time to identify where the ads will be to mute or skip them (a man can dream, can't he?).

Micro-agents could proactively propose responses to emails, texts, and more — an agent for everything from making appointments, to paying bills, to drafing presentations.

A revolution in progress

Even if top-end progress feels like it's slowing down, there is the potential for massive disruption simply as today's models get cheaper and more widely available. And I'd argue we're starting to see some early signs.

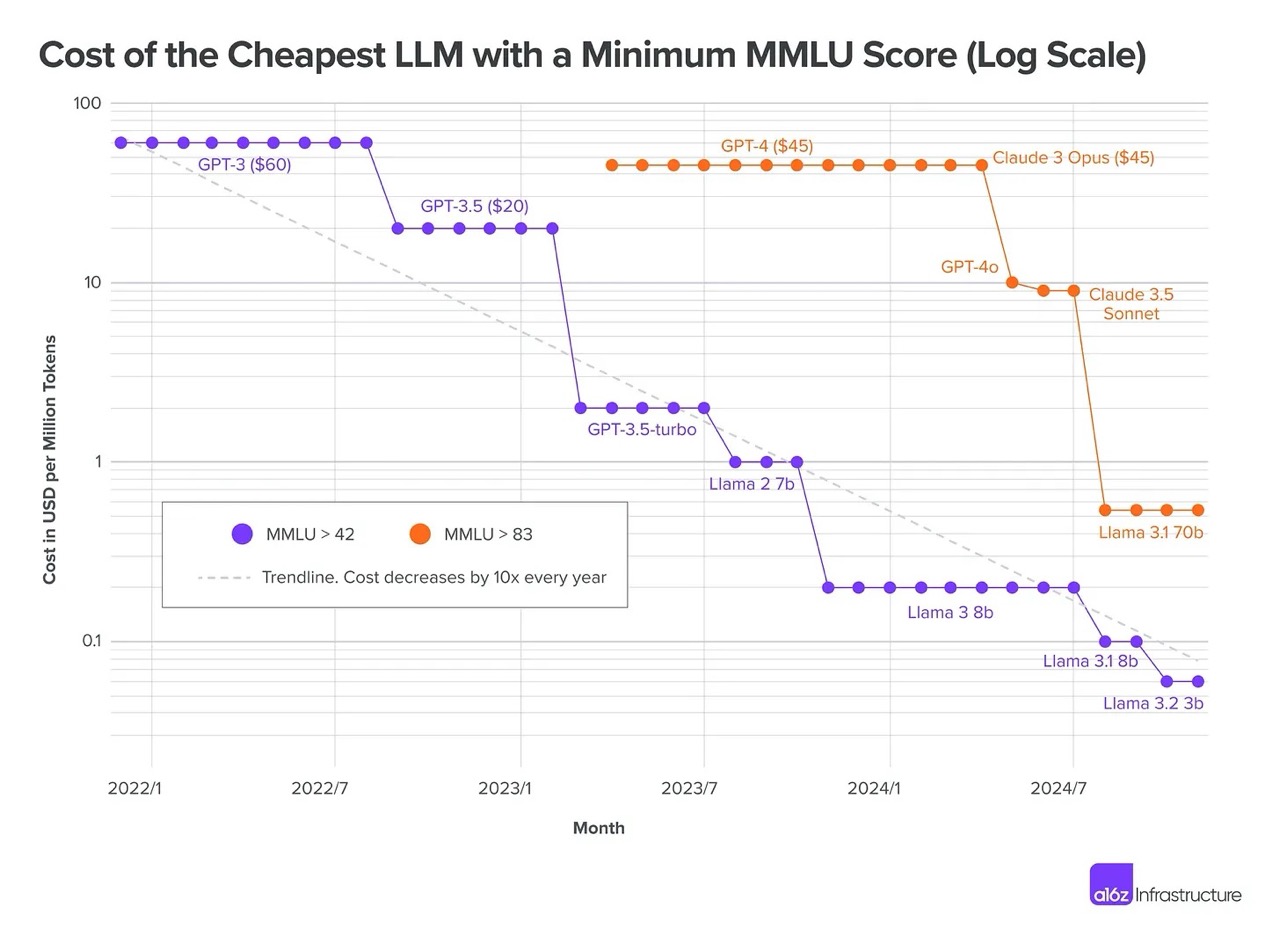

Already, costs have come down significantly for the same level of output. Models with powerful coding capability can now be run on consumer laptops. And NVIDIA researchers are declaring that "small language models" are the future of agentic AI.

The cost per token of equivalent LLM performance has decreased drastically (A16Z)

It's hard to know what the world's most powerful AI will be capable of in 10 or 20 years. But I'm confident making a related prediction: current-day LLM performance will be widely and cheaply available for anyone to run as many instances as they want or need. That's what I'd bet on for a revolution. ⧈